Chinese Whispers and Digital Ghosts: Securing the AI Ecosystem with A2A

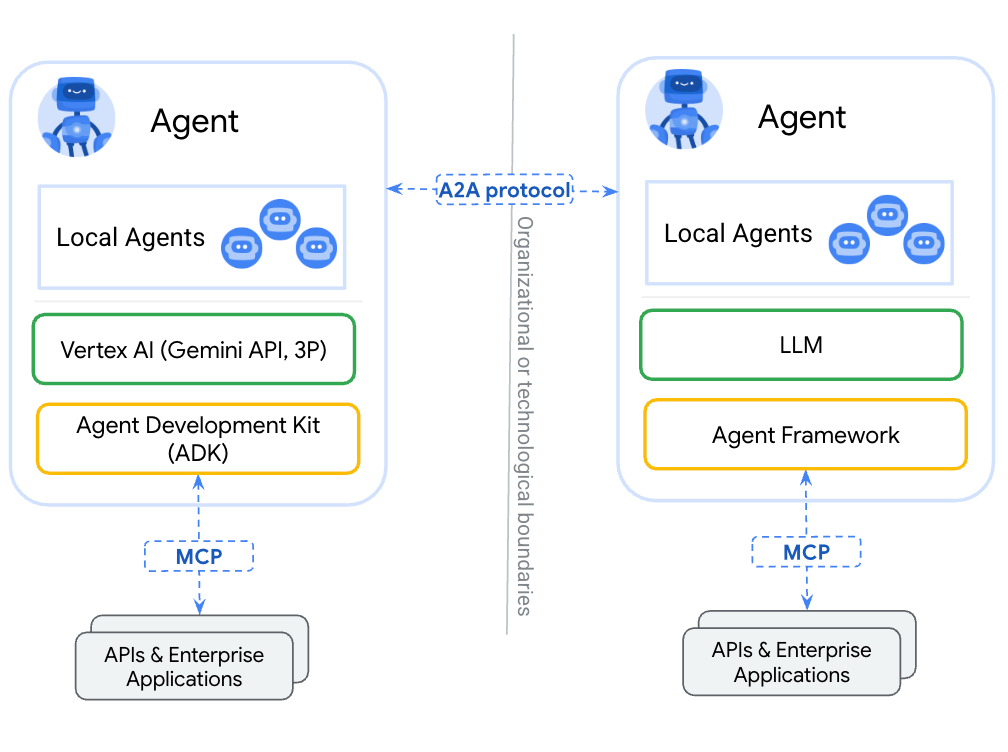

In the previous article we covered MCP servers, the USB-C of the AI Agents (protocol that allows tools access). Today we are moving beyond individual agents to complex networks where AI systems collaborate. Here is where we introduce Google's Agent2Agent (A2A) protocol, launched in April 2025, is at the forefront of this shift, enabling seamless and secure communication between diverse AI agents. While Model Context Protocol (MCP) empowers individual agents, A2A creates the essential collaborative ecosystem for these agents to work together on complex tasks, acting as the "network protocol" for AI.

The Foundation of Secure Inter-Agent Communication

A2A addresses the critical "horizontal integration" problem: how do autonomous AI systems, built by different teams and technologies, communicate effectively and securely? Developed with over 60 technology partners, A2A establishes universal standards for agent interoperability, stemming from Google's real-world experience in deploying large-scale, multi-agent enterprise systems.

A2A is complementary to MCP, separating inter-agent communication (A2A) from intra-agent capability invocation (MCP). I believe some people still see A2A to compete with MCP, but you can see that they are cumplimentary and serve different purposes.

This modular design enhances security and maintainability, allowing agents to collaborate without needing shared memory or direct access to internal tools. A key security feature is "opaque execution," which allows agents to work together while maintaining proprietary intellectual property and strong trust boundaries. This is vital in enterprise environments where agents may be owned by different organizations, ensuring sensitive internal operations remain private.

Enterprise-Grade Security by Design

Ok, let´s move on the interesting part, luckily A2A protocol was designed with security in mind, which was not the case of MCP as we’ve seen in the previous article. The key aspects are:

- Standardized Communication: A2A uses JSON-RPC 2.0 over HTTP(S), integrating seamlessly with existing IT infrastructure and providing robust security features.

- Authentication & Authorization: Instead of proprietary solutions, A2A leverages standard web mechanisms. It mandates HTTPS for all production communications and supports multiple schemes like OAuth2, API keys, and JWT tokens, all advertised via the agent's "Agent Card" metadata. This ensures integration with existing enterprise identity management systems and adherence to high security standards.

- Role-Based Access Control (RBAC): A2A's granular authorization capabilities directly facilitate RBAC within agent interactions. Organizations can define roles and permissions for agents, ensuring that only authorized agents can access specific skills or data, thereby enforcing least privilege.

- Transport-Level Security: Mandatory HTTPS and modern TLS versions (1.2 or higher) with industry-standard cipher suites form the backbone of A2A's security, preventing man-in-the-middle attacks and ensuring compatibility with firewalls and intrusion detection systems.

- Delegated Authentication Model: A2A separates authentication from payload content, simplifying security auditing and enabling the use of existing enterprise authentication infrastructure without modifying agent logic.

- Data Privacy & Confidentiality: The protocol emphasizes data minimization principles and includes compliance considerations for regulations like GDPR, CCPA, and HIPAA, guiding organizations in regulated industries. A2A's design helps organizations align with data residency, consent management, and data sharing requirements, particularly for sensitive data flowing between agents.

Security Challenges and Future Implications

Great, does it mean we are covered and can finally relax? While A2A offers robust security, its adoption introduces new considerations and challenges:

- Expanded Attack Surfaces: The distributed nature of A2A increases attack surfaces, requiring enhanced monitoring and threat detection for multi-agent systems. A2A's emphasis on opaque execution and clear trust boundaries helps mitigate lateral movement, as a compromised agent's access is typically limited to its defined permissions, preventing direct infiltration of other agents' internal operations.

- Opaque Execution Monitoring: The privacy-preserving "opaque execution" model necessitates new security monitoring approaches that can assess agent behavior and collaboration patterns without violating privacy boundaries. This emphasizes the need for robust agent observability solutions that can capture high-level interaction logs and metadata while respecting internal operational privacy.

- Auditability and Logging: Comprehensive logging and audit trails are paramount for A2A interactions. Organizations need to monitor who accessed what, when, and from which agent to ensure accountability and detect anomalies. A2A's structured communication provides a foundation for detailed logging of agent interactions, enabling clearer oversight. This could be tied with the previous point.

- Authentication and Authorization Complexity: Managing consistent security policies across multiple agents operating under different security domains and trust relationships requires careful coordination and robust governance frameworks. The Non-Human identities proliferation is going to be interesting and challenging.

- Supply Chain Security Risks: The use of third-party A2A agents or external networks introduces supply chain risks, similar to software dependencies. This demands agent reputation systems, security assessment frameworks, and continuous monitoring.

- Complex Data Privacy: Information flow across multiple agents and organizational boundaries requires comprehensive data governance frameworks to track data, enforce retention, and ensure regulatory compliance.

- Incident Response for Multi-Agent Systems: Traditional incident response plans must be adapted for distributed AI environments. This includes establishing clear protocols for identifying compromised agents, isolating them from the network, and investigating the scope of a breach within a collaborative agent ecosystem.

- Future of Decentralized Identity (DID) for Agents: While A2A currently relies on standard web authentication, the future may see Decentralized Identity (DID) solutions play a significant role. DIDs could provide agents with self-sovereign identities and verifiable credentials, enhancing their security, trust, and interoperability in even more dynamic and distributed environments.

- Agent Lifecycle Governance: A significant challenge lies in managing the lifecycle of autonomous agents. Just as with human employees, where ensuring timely access revocation after a departure is critical, organizations must establish stringent governance for agents. Autonomous agents, once deployed, can continue to operate and access network resources even after contracts, partnerships, or projects conclude. This necessitates robust policies for agent deactivation, credential revocation, and system removal to prevent unauthorized or unintended ongoing operations, effectively 'offboarding' agents when their purpose is fulfilled. Many of you are familiar with the pains of the Joiners Leavers Move (JLM) processes in companies, accounts left active after employee leaves the company, contractors access active long after contract expired, etc. We also saw the problems of abandoned Cloud resources and assets, like S3 buckets that are not used anymore left in production, servers running, expending money and increasing the attack surface, that were forgotten in the migration or the lack of service lifecycle governnace. Now just imagine this problem but with autonomous agents. 😅

Security threats for Multi agents using A2A protocol:

Let´s dive on some of the main threats we have in multi agents environments:

Agent Identity Deception / Typosquatting

- Scenario: A malicious actor registers an AI agent and its associated Agent Card metadata with an identity (name, domain, listed capabilities) that is deceptively similar to a legitimate, trusted agent within an A2A ecosystem. This could involve "typosquatting" (e.g., using

finance-rep0rting-agent.cominstead offinance-reporting-agent.com) or simply choosing a very similar, yet distinct, name (e.g., "DataAnalyzerAgent" instead of "DataAnalysisAgent"). While A2A mandates HTTPS and robust authentication (OAuth2, API keys, JWT), these mechanisms verify the bearer of the credentials for a given domain/identity. They don't inherently verify that the domain/identity itself is the one intended by the communicating agent. - Problem: If a client agent, or another legitimate agent, mistakenly discovers and resolves the imposter's

/.well-known/agent.jsonor uses its endpoint due to misconfiguration, a typo in a configuration file, or a sophisticated social engineering attack, it will attempt to communicate with the imposter. The imposter agent can present valid TLS certificates for its domain and authenticate via A2A's supported mechanisms, thus appearing legitimate at the protocol level. - Abuse: The imposter agent can then:

- Intercept Sensitive Data: Receive tasks and data intended for the legitimate agent.

- Exfiltrate Information: Capture and forward this sensitive data to an attacker-controlled endpoint.

- Inject Malicious Instructions: Respond to client agents with fabricated or malicious task outcomes, or send malicious instructions disguised as legitimate A2A messages to other agents.

- Direct Unauthorized Actions: Trick legitimate agents into performing actions (e.g., modifying records, transferring funds) based on false instructions, believing they are interacting with a trusted peer.

Context Poisoning / Indirect Prompt Injection (via A2A Interactions):

- Scenario: A malicious agent, or a compromised legitimate agent, sends a seemingly innocuous A2A message to a peer agent. This message's payload, while appearing valid for the task, contains hidden, malicious instructions (e.g., embedded in a task description, or a request for "input-required" state update). The receiving agent's underlying AI model or logic then interprets and executes these hidden instructions.

- Abuse: This can lead to the receiving agent divulging sensitive information it shouldn't, performing unintended actions (e.g., deleting critical files, making unauthorized transactions), or compromising other systems it has access to. For example, a malicious agent could send a task to a "script-analyzer" agent that, once analyzed, leads the analyzer to inadvertently reveal system credentials or trigger a deployment in production.

Data Exfiltration through Legitimate Channels:

- Scenario: A compromised or malicious A2A agent, with access to sensitive data (e.g., customer information, financial records, intellectual property), is designed to subtly exfiltrate this data. It uses A2A's legitimate communication mechanisms (e.g., task results, status updates, or even by embedding data in seemingly innocuous fields within JSON-RPC payloads) to send small, encrypted chunks of sensitive information to an attacker-controlled external endpoint.

- Abuse: This allows attackers to bypass traditional data loss prevention (DLP) systems that might not be configured to inspect A2A traffic patterns for subtle data leakage. The data is transferred stealthily, blended with normal agent communication, making detection challenging.

Tool Misuse / Privilege Escalation via Agent Chaining / Chinese whispering attack:

- Scenario: An attacker gains control over a lower-privileged A2A agent. They then exploit the trust relationships or the interaction flexibility within the A2A ecosystem to chain commands across multiple agents, ultimately elevating privileges or misusing tools. For example, a low-privilege agent might ask another agent (that has legitimate access to a critical tool) to perform an action that, in combination with the attacker's input, becomes malicious.

- Abuse: This can lead to unauthorized access to sensitive systems or data, execution of arbitrary code, or significant business disruption. A compromised agent designed for "reporting" might be tricked into initiating a "data deletion" task through another agent it collaborates with, exploiting a chain of legitimate but misdirected actions.

Will we see the Chinese whispering attack in multi agents environments? The "Chinese whispers" (or "Telephone game") attack in multi-agent environments is a serious concern, and can happen with this conditions:

- Semantic Drift / Misinformation Propagation:

- Scenario: A legitimate piece of information or instruction is passed from Agent A to Agent B, then to Agent C, and so on. At each "hop," due to slight misinterpretations by the AI model, ambiguous phrasing, contextual misunderstandings, or even subtle adversarial manipulation (e.g., a mild "prompt injection" that doesn't fully compromise but subtly biases), the information can subtly change.

- Abuse: Over several iterations, the original intent or data can become significantly distorted, leading to:

- Incorrect decisions: Agents making choices based on fundamentally flawed information.

- Misleading reports: Generated summaries or analyses that no longer reflect the true data.

- Malicious outcomes: What started as a benign request could, through a chain of slight misinterpretations, evolve into a harmful action if the context shifts enough (e.g., a request to "archive" old data becomes a request to "delete" it, or to "transfer" it to an external, unauthorized location). This is a form of degradation of integrity.

- Vulnerability Amplification:

- Scenario: A subtle vulnerability or bias in one agent (e.g., a slight tendency to be agreeable to certain types of input, or a minor susceptibility to a particular indirect prompt injection) gets amplified as its output becomes the input for subsequent agents.

- Abuse: A minor misinterpretation in Agent A might lead Agent B to make a slightly larger error, which then causes Agent C to take a severely incorrect action. This cascade can turn minor flaws into major security incidents or operational failures.

Denial of Service (DoS) / Resource Exhaustion:

- Scenario: A malicious A2A agent, or a compromised agent, floods a target A2A agent or the entire A2A network with a high volume of requests, complex tasks, or large data payloads. This can leverage A2A's asynchronous capabilities and long-running task support.

- Abuse: This overwhelms the target agent's processing capabilities, consumes excessive resources (CPU, memory, network bandwidth), or fills up task queues, leading to degraded performance, unresponsiveness, or complete operational outage for legitimate agents and the services they support. This can be particularly effective if combined with resource-intensive tasks, forcing the victim agent to perform complex computations or data transfers.

Interestingly despite that the protocol have Security principles, and measures, many of the attacks in the MCP servers side, are still possible in multi agents environments using A2A protocol.

By providing a secure and standardized communication framework, A2A enables collaborative AI ecosystems that can tackle complex real-world challenges, but at the same time introduces a new set of challenges for Cybersecurity teams, and attackers have a new target to abuse and find vulnerabilities. Exciting times ahead.

Chris

Protocol Draft papel: https://github.com/google-a2a/A2A