From Vibe Coding to Software Supply Chain Nightmares

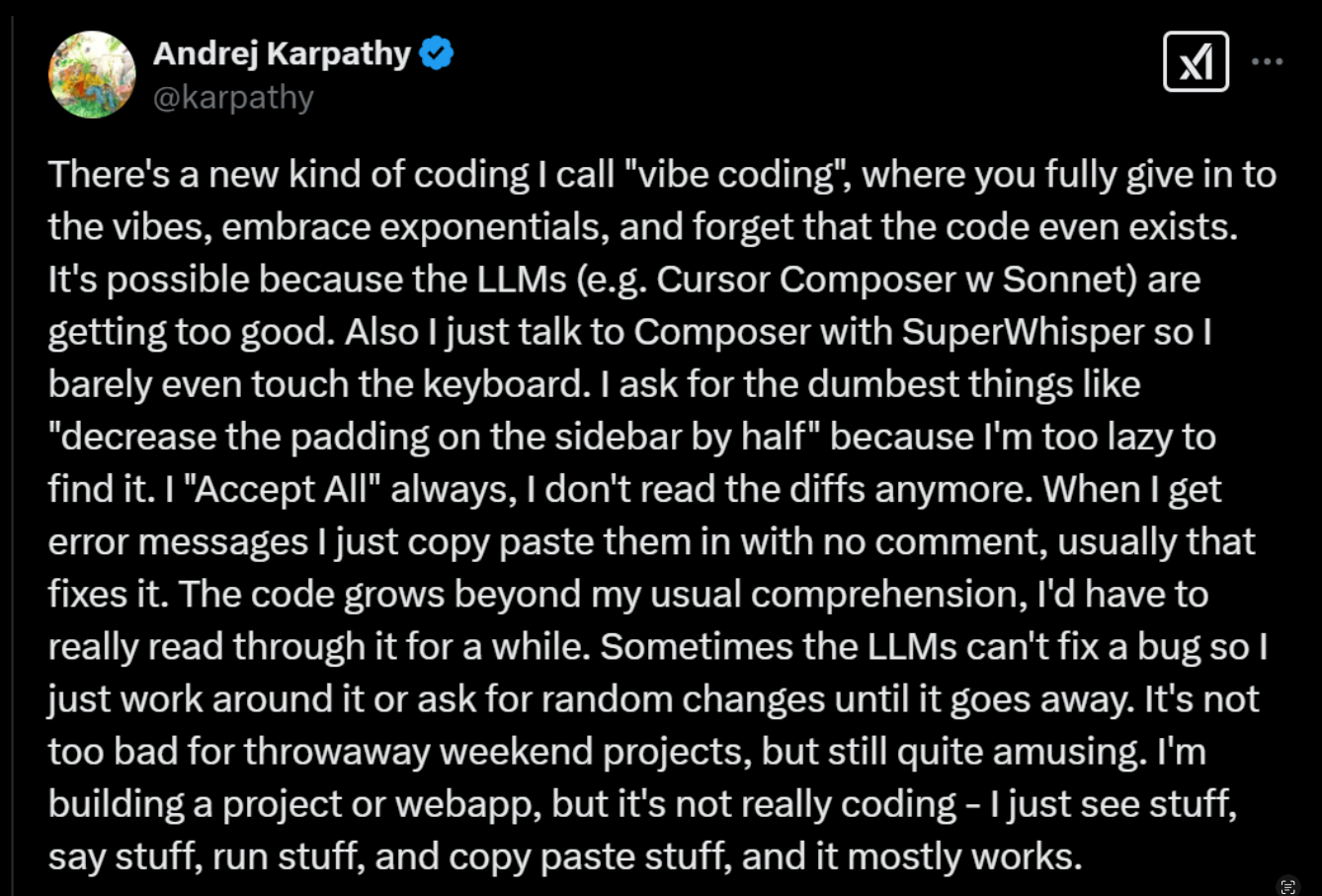

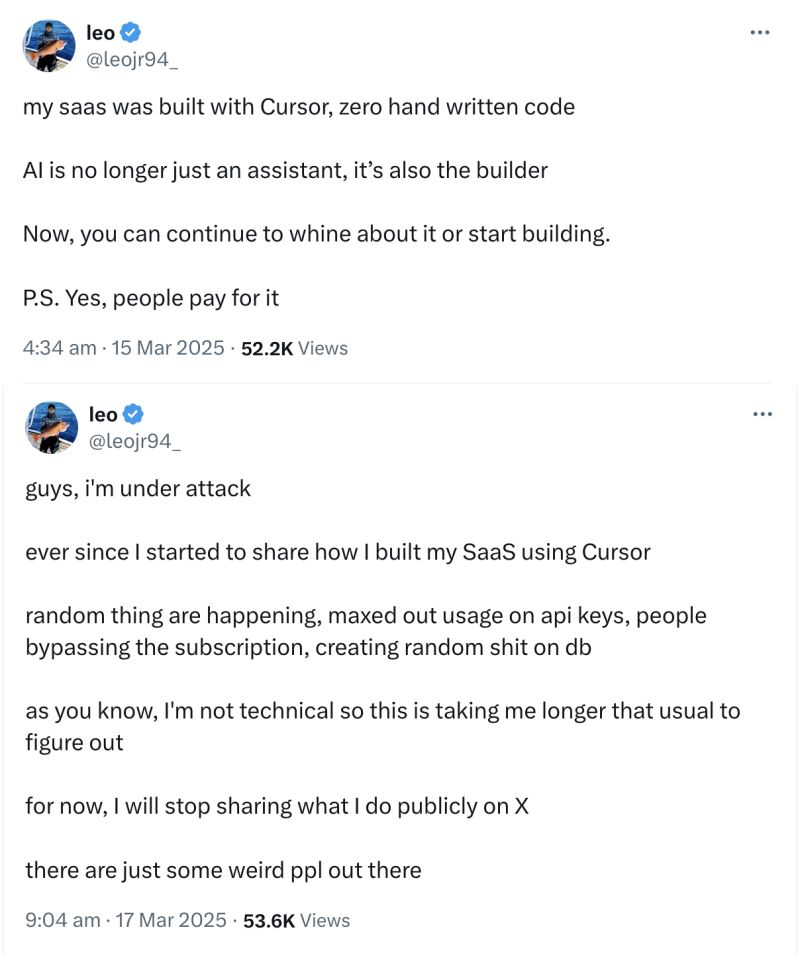

We're witnessing what some call "vibe coding": developers with minimal technical experience leveraging AI tools (Cursor, Windsurf, Cline, Bolt, Replit, Vercel v0, etc.) to build applications that would previously have required years of expertise. While this democratization of coding is revolutionary, recent security incidents highlight a critical problem in this new paradigm. Love it or hate it, it is already here...

When Convenience Meets Vulnerability

At the same time, hackers from the North Korean threat actor Lazarus infected hundreds of systems by distributing malicious code through npm packages, some with innocent-sounding names:

- is-buffer-validator – Malicious package mimicking the popular is-buffer library to steal credentials.

- yoojae-validator – Fake validation library used to extract sensitive data from infected systems.

- event-handle-package – Disguised as an event-handling tool but deploys a backdoor for remote access.

- array-empty-validator – Fraudulent package designed to collect system and browser credentials.

- react-event-dependency – Poses as a React utility but executes malware to compromise developer environments.

- auth-validator – Mimics authentication validation tools to steal login credentials and API keys.

(Some of these packages were still available at the time of writing.)

This attack is part of a broader trend: sophisticated threat actors are increasingly targeting software supply chains rather than individual applications. The logic is simple: why attempt to breach thousands of applications one by one when compromising a single popular dependency can provide access to all of them? The 2020 SolarWinds attack, the 2021 Kaseya incident, and now these npm compromises show how attackers have shifted from attacking the castle to poisoning the wells that supply it. For nation-state actors like Lazarus, these attacks offer an asymmetric advantage: minimal effort for maximum impact.

A group of security researchers discovered another recent vector: LLMs generating references to code packages that don't exist. Attackers wasted no time identifying those hallucinated names and publishing backdoored packages under them, infecting any program that installs the AI-generated dependencies.
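
One practical defense against hallucinated dependencies is to check every AI-suggested package name against the registry before installing it. The sketch below is a minimal Python example, not a finished tool. It assumes the public npm registry endpoint (https://registry.npmjs.org/<name>) returns 404 for unknown packages and otherwise a metadata document with a time.created timestamp; the helper names (fetch_metadata, assess) and the 90-day threshold are hypothetical choices, not an established policy.

```python
import json
import urllib.error
import urllib.parse
import urllib.request
from datetime import datetime, timedelta, timezone
from typing import Callable, Optional

REGISTRY = "https://registry.npmjs.org/"
MIN_AGE = timedelta(days=90)  # hypothetical "too new to trust blindly" threshold


def fetch_metadata(name: str) -> Optional[dict]:
    """Return the registry document for `name`, or None if the registry answers 404."""
    url = REGISTRY + urllib.parse.quote(name, safe="@")  # "@scope/pkg" -> "@scope%2Fpkg"
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise


def assess(name: str,
           fetch: Callable[[str], Optional[dict]] = fetch_metadata,
           now: Optional[datetime] = None) -> str:
    """Classify an AI-suggested dependency as 'missing', 'suspicious', or 'ok'."""
    meta = fetch(name)
    if meta is None:
        return "missing"  # probably hallucinated: do not install
    created_raw = (meta.get("time") or {}).get("created")
    if not created_raw:
        return "suspicious"  # no creation date to reason about
    # Registry timestamps look like "2014-03-14T00:00:00.000Z"; older Pythons reject the "Z".
    created = datetime.fromisoformat(created_raw.replace("Z", "+00:00"))
    now = now or datetime.now(timezone.utc)
    return "suspicious" if now - created < MIN_AGE else "ok"
```

Because attackers register hallucinated names precisely so that a simple existence check passes, the sketch also treats very new packages as suspicious. A package that comes back "ok" is not thereby proven safe; it has only cleared the cheapest filter.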

This shift toward the supply chain creates a perfect storm when combined with two other trends:

- The increasing reliance on open-source packages and dependencies

- The rising population of AI-assisted developers who may lack the security background to identify threats

New (old?) Security Gaps

Traditional developers build expertise through years of experience, gradually developing an intuition for security risks. The more experienced ones learn to be suspicious of third-party code and to investigate dependencies before adopting them, and most are supported by company processes or tooling that manage the security of those dependencies.

AI-assisted developers, by contrast, often bypass this learning curve. When an AI suggests including a package to solve a problem, the natural impulse is to trust that recommendation without further scrutiny. After all, if you're relying on AI to write code you don't fully understand, you're also likely to trust its judgment on dependencies and "vibe" the security as well. This is where the problem lies.

The reality is that AI coding assistants currently excel at producing functional code but remain limited in their ability to fully contextualize security implications. They might flag known vulnerabilities, but they can't replicate the awareness that experienced developers have. Current assistants don't have built-in dependency scanning capabilities; they rely on separate plugins being enabled in the IDE to cover this aspect.

AI coding assistant tools need to prioritize security alongside code generation, integrating contextual warnings and explanations about potential security implications.

Another big area that vibe coding will amplify is secrets sprawl and leaks: people are often unaware of which keys and secrets their applications expose, and some are already suffering the consequences.
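
A common vibe-coding leak is an API key pasted directly into source code and then committed or shipped. Below is a minimal Python sketch of the safer habit: read secrets from the environment (populated by your shell, your CI/CD secret store, or your hosting platform) and never print them in full. The helper names get_secret and redact, and the error type, are hypothetical illustrations rather than any library's API.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been configured."""


def get_secret(name: str) -> str:
    """Read a secret from the environment instead of hard-coding it."""
    value = os.environ.get(name)
    if not value:
        # Fail loudly rather than falling back to a key embedded in the code.
        raise MissingSecretError(f"{name} is not set; configure it outside the codebase")
    return value


def redact(value: str, visible: int = 4) -> str:
    """Return a log-safe form of a secret that shows only its last few characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]
```

This only protects server-side code: anything bundled into a browser front end is visible to every user, so keys with real privileges have to stay behind a backend. Keeping local .env files out of version control (for example via .gitignore) matters just as much.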

Essential Open Source Tools for Dependency Security and Secret Scanning

For teams embracing AI-assisted development, incorporating robust dependency scanning and secret scanning into the workflow is fundamental. Fortunately, several powerful open source tools that run in your IDE or in CI/CD workflows can help mitigate these risks:

IDE:

- Snyk Open Source: While Snyk offers commercial plans, its free tier of dependency scanning provides comprehensive vulnerability detection and actionable remediation advice, which is particularly valuable for developers learning about security concerns.

- npm audit: Built into the npm CLI, this command provides a first line of defense for JavaScript projects by checking the dependency tree recorded in your lockfile (package-lock.json) against the npm registry's security advisory database.

CI/CD:

- Trivy: This comprehensive security scanner examines not only dependencies but also container images and infrastructure as code, providing a broader security perspective that becomes especially relevant as projects scale.

- OWASP Dependency-Check: This versatile tool identifies known vulnerabilities in project dependencies across multiple languages and package managers. Its straightforward integration with CI/CD pipelines makes it ideal for teams transitioning to AI-assisted development.

- Dependabot: Now integrated with GitHub, Dependabot automatically creates pull requests to update dependencies with known vulnerabilities, adding a layer of automation to security maintenance.

- TruffleHog: A secrets discovery, classification, validation, and analysis tool. In this context, a secret is a credential a machine uses to authenticate itself to another machine: API keys, database passwords, private encryption keys, and more.

- Gitleaks: Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it.
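
The core idea behind secret scanners such as Gitleaks and TruffleHog is to match known credential formats with regular expressions and to flag suspiciously random strings. The Python sketch below illustrates that idea only: its three rules and the entropy cutoff are illustrative assumptions, not either tool's actual rule set. Real scanners ship hundreds of rules, scan git history rather than a single text blob, and (in TruffleHog's case) can check whether a found credential is still live.

```python
import math
import re
from collections import Counter

# A tiny illustrative subset of credential formats.
RULES = {
    "aws-access-key-id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "github-token": re.compile(r"\bgh[pousr]_[A-Za-z0-9]{36}\b"),
    "private-key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}
# Generic `api_key = "..."` style assignments; the quoted value is entropy-checked.
ASSIGNMENT = re.compile(r"""(?i)(?:api[_-]?key|secret|token|password)\s*[:=]\s*["']([^"']{16,})["']""")
ENTROPY_THRESHOLD = 3.5  # bits per character; an illustrative cutoff


def shannon_entropy(s: str) -> float:
    """Average information per character; random keys score high, words score low."""
    counts = Counter(s)
    return -sum(c / len(s) * math.log2(c / len(s)) for c in counts.values())


def scan(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs for suspected secrets in `text`."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, rule))
        match = ASSIGNMENT.search(line)
        if match and shannon_entropy(match.group(1)) >= ENTROPY_THRESHOLD:
            findings.append((lineno, "high-entropy-assignment"))
    return findings
```

The entropy check is what lets a scanner ignore placeholder values like "aaaaaaaaaaaaaaaaaaaa" while still catching an unfamiliar key format; it is also why these tools produce some false positives.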

There are many more tools and projects for supply chain security; this is only a subset relevant to individuals and small teams. For more, check the Open Source Security Foundation (OpenSSF).

For small teams using AI coding assistants, I recommend adopting at least one dependency scanning tool, ideally two. This redundancy helps catch vulnerabilities that might slip through a single tool's detection and provides a more comprehensive security posture (e.g., Dependabot in GitHub plus Trivy in CI/CD or the IDE).
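
To see what that redundancy buys you, compare the packages each tool flags. The minimal Python sketch below assumes the JSON layouts of `npm audit --json` (npm 7 and later: a top-level vulnerabilities object keyed by package name, whose via list holds advisory objects for directly vulnerable packages and plain package names for packages affected only through a dependency) and of `trivy fs --format json .` (Results, then Vulnerabilities, then PkgName, where Vulnerabilities may be null). Verify those shapes against the versions you run; the function names are hypothetical.

```python
def npm_audit_packages(report: dict) -> set[str]:
    """Packages that npm audit ties to an advisory directly (not just via a dependency)."""
    return {
        name
        for name, entry in report.get("vulnerabilities", {}).items()
        if any(isinstance(via, dict) for via in entry.get("via", []))
    }


def trivy_packages(report: dict) -> set[str]:
    """Packages named in any Trivy result; clean targets may have Vulnerabilities = null."""
    return {
        vuln["PkgName"]
        for result in report.get("Results") or []
        for vuln in result.get("Vulnerabilities") or []
    }


def compare(npm_report: dict, trivy_report: dict) -> dict[str, set[str]]:
    """Split flagged packages into those both tools found and those only one found."""
    npm_pkgs = npm_audit_packages(npm_report)
    trivy_pkgs = trivy_packages(trivy_report)
    return {
        "both": npm_pkgs & trivy_pkgs,
        "only_npm_audit": npm_pkgs - trivy_pkgs,
        "only_trivy": trivy_pkgs - npm_pkgs,
    }
```

Load each tool's saved report with json.load and pass both dictionaries to compare. The only_* buckets are the findings a single-tool setup would have missed (or, sometimes, one tool's false positives worth a second look).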

The scanning tools work well against known malicious packages that have already been identified and cataloged in security databases. For instance, npm audit checks dependencies against the npm registry's advisory database, which is effective for detecting previously reported issues. However, zero-day backdoors or sophisticated implants may go undetected until they are publicly disclosed.
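
Another catalog worth querying is OSV.dev, which aggregates advisories across ecosystems, including OpenSSF's malicious-packages reports (IDs starting with MAL-). The minimal Python sketch below assumes the public POST https://api.osv.dev/v1/query endpoint and its vulns response field; the helper names are hypothetical. It shares the limitation just described: it can only find what someone has already reported.

```python
import json
import urllib.request
from typing import Callable

OSV_QUERY_URL = "https://api.osv.dev/v1/query"


def post_json(url: str, payload: dict) -> dict:
    """POST a JSON payload and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def osv_advisories(name: str, version: str,
                   post: Callable[[str, dict], dict] = post_json) -> list[str]:
    """Return advisory IDs recorded for one npm package version."""
    payload = {"package": {"name": name, "ecosystem": "npm"}, "version": version}
    response = post(OSV_QUERY_URL, payload)
    # An empty JSON object means no known advisories. Pagination (next_page_token)
    # is ignored in this sketch.
    return [vuln["id"] for vuln in response.get("vulns", [])]


def malicious_ids(advisory_ids: list[str]) -> list[str]:
    """Keep only malicious-package reports, which use the MAL- prefix."""
    return [advisory for advisory in advisory_ids if advisory.startswith("MAL-")]
```

Any MAL- hit should block the install outright, whereas ordinary vulnerability advisories usually call for an upgrade rather than removal.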

Detecting sophisticated backdoors requires additional layers: behavioral analysis, advanced static analysis, supply chain verification, and private artifact registries or proxies. Many of these capabilities are found mainly in commercial products, are difficult to implement with open source software, or are too much for individuals and small teams.
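
Individuals can still add a cheap static heuristic. npm runs preinstall, install, and postinstall scripts automatically during installation, which makes them a common way for malicious packages to execute. The Python sketch below lists installed packages that declare such hooks. It is a triage aid, not behavioral analysis: legitimate packages (native add-ons, for example) use install scripts too, and a malicious package can hide its payload elsewhere. It assumes a standard node_modules layout, checks only top-level and @scope packages (not nested node_modules), and the function names are hypothetical.

```python
import json
from pathlib import Path

INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def iter_package_dirs(node_modules: Path):
    """Yield top-level package directories, descending one level into @scope folders."""
    for entry in sorted(node_modules.iterdir()):
        if entry.is_dir() and entry.name.startswith("@"):
            yield from sorted(p for p in entry.iterdir() if p.is_dir())
        elif entry.is_dir() and not entry.name.startswith("."):  # skip .bin and similar
            yield entry


def packages_with_install_scripts(node_modules: Path) -> dict[str, dict[str, str]]:
    """Map package name -> the install-time lifecycle scripts it declares."""
    findings = {}
    for pkg_dir in iter_package_dirs(node_modules):
        manifest = pkg_dir / "package.json"
        if not manifest.is_file():
            continue
        data = json.loads(manifest.read_text(encoding="utf-8"))
        scripts = data.get("scripts") or {}
        hooks = {hook: scripts[hook] for hook in INSTALL_HOOKS if hook in scripts}
        if hooks:
            findings[data.get("name", pkg_dir.name)] = hooks
    return findings
```

Run it as packages_with_install_scripts(Path("node_modules")) and read every flagged script. A stricter option is to install with `npm install --ignore-scripts` (or set ignore-scripts=true in .npmrc) and run scripts only for packages you have reviewed.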

Until AI coding agents and security tools improve, developers will have to rely on awareness and processes:

- Low-code developers and teams using AI-assisted development tools need baseline security awareness focused specifically on dependency management. Training should cover common attack vectors such as typosquatting, masquerading, dependency confusion, and dependency hijacking.

- Organizations need clear policies for reviewing AI-generated code, approving third-party packages, and managing the software supply chain.
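
Awareness training sticks better with a concrete check. The minimal Python sketch below screens for typosquatting and masquerading. It flags dependency names within a small edit distance of a well-known package, and names that wrap a well-known name with extra words (like is-buffer-validator). POPULAR is a tiny hypothetical stand-in for a real list of top packages, and the distance threshold is illustrative.

```python
POPULAR = ("is-buffer", "lodash", "express", "axios", "chalk")  # hypothetical shortlist


def levenshtein(a: str, b: str) -> int:
    """Classic edit distance: insertions, deletions, and substitutions each cost 1."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def lookalikes(name: str, popular: tuple[str, ...] = POPULAR,
               max_distance: int = 2) -> list[str]:
    """Return the popular packages that `name` may be imitating."""
    if name in popular:
        return []  # it is the real package
    hits = []
    for known in popular:
        typo = levenshtein(name, known) <= max_distance          # e.g. "lodahs"
        wraps = name.startswith(known + "-") or name.endswith("-" + known)  # masquerade
        if typo or wraps:
            hits.append(known)
    return hits
```

Expect false positives: with react in the list, the wrapping rule would also flag legitimate packages such as react-dom, and very short names make the edit-distance rule noisy. Treat hits as prompts for review rather than verdicts.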

A Balanced Path Forward

The rise of AI-assisted coding represents a tremendous opportunity to unlock innovation. The solution isn't to abandon these tools but to evolve our approach to security in response to this new reality.

As we move forward, security awareness and controls must be integrated into the heart of AI coding tools, not treated as an optional add-on.

As a security professional, I want to see AI coding assistants and agents that help developers build applications while understanding the security implications of every library they import and every API they call, embedding security awareness into the AI development process itself. We won't solve this with a separate process.

Until then, we will see an increase in vulnerable AI-generated applications and plenty of nightmares for security teams.

Have a good night's sleep...