SIN-01 Security and Innovation Newsletter Jan 20th 2025

Welcome to the first issue of the "Security and Innovation notes" newsletter, where I curate bi-weekly insights on cybersecurity and innovation. Expect a thoughtfully selected mix of articles, from AI advancements to cybersecurity news and trends, to save you time and introduce you to fresh perspectives.

Security projects and articles:

🛡️🤖 Scaling Threat Modeling with AI: This is a great project with a lot of valuable insights for people who want to start solving real problems in product security and AppSec. It generates threat models, security design documentation, and attack trees from code repositories. It could be a great starting point for product security teams that need to ensure coverage at scale, or for when you join a new company and need to quickly understand what is in place. Link

🛡️🤖 Lessons from Red Teaming 100 Generative AI Products: Microsoft's red team evaluated over 100 generative AI products to understand their safety and security risks. They identified eight key lessons, emphasizing the importance of human judgment alongside automation when testing these systems. The team also shared their findings to help others improve AI risk assessments and address real-world vulnerabilities. Original Paper - Nicer Ebook

🛡️🤖 Tracking cloud-fluent threat actors - Behavioural cloud IOCs: A nice pair of articles from Wiz on strategies for tracking and defending against malicious activity and threats in the cloud using atomic indicators of compromise (IOCs). Part 1 and Part 2
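As a rough illustration of what matching on atomic IOCs means (this is not Wiz's method or tooling), the sketch below checks cloud audit events against known-bad values. The field names mirror AWS CloudTrail's `sourceIPAddress` and `userAgent`; the IOC values, events, and the `match_iocs` helper are hypothetical.

```python
# Hypothetical atomic IOCs: exact values previously tied to a threat actor.
# The IPs come from documentation-only ranges (RFC 5737).
IOCS = {
    "sourceIPAddress": {"203.0.113.7", "198.51.100.23"},
    "userAgent": {"python-requests/2.31.0 evil-tool"},
}

def match_iocs(event: dict) -> list[str]:
    """Return the names of event fields whose values exactly match a known IOC."""
    return [field for field, bad in IOCS.items() if event.get(field) in bad]

# Made-up events shaped loosely like CloudTrail records.
events = [
    {"eventName": "ListBuckets", "sourceIPAddress": "203.0.113.7",
     "userAgent": "aws-cli/2.15.0"},
    {"eventName": "GetObject", "sourceIPAddress": "192.0.2.10",
     "userAgent": "aws-cli/2.15.0"},
]

for e in events:
    hits = match_iocs(e)
    if hits:
        print(f"ALERT {e['eventName']}: matched {hits}")
```

Exact matching like this is cheap to run, but attackers can rotate values such as IP addresses easily.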

🛡️🤖 Agents to automate Pentest (TARS): TARS is an attempt to automate parts of penetration testing using AI agents. It is at an early stage, but it is an interesting way to explore agents in this domain. It is built on the CrewAI framework. Link

🛡️🤖 Reaper: "Reaper by Ghost Security is a modern, lightweight, and deadly effective open-source application security testing framework—engineered by humans and primed for AI. Reaper slashes through the complexities of app security testing, bringing together reconnaissance, request proxying, request tampering/replay, active testing, vulnerability validation, live collaboration, and reporting into a killer workflow." It looks like a potential strong future player in open-source application security testing. Link

🤖🤖 AI Agents spotlight:

🤖 Excellent "Agents" paper from Google: this whitepaper discusses how Generative AI agents enhance language models by using tools to access real-time information and perform complex tasks. It highlights the importance of an orchestration layer that guides the agent's reasoning and decision-making processes. Additionally, it explains how extensions and functions help agents interact with external systems, making them more effective in various applications.

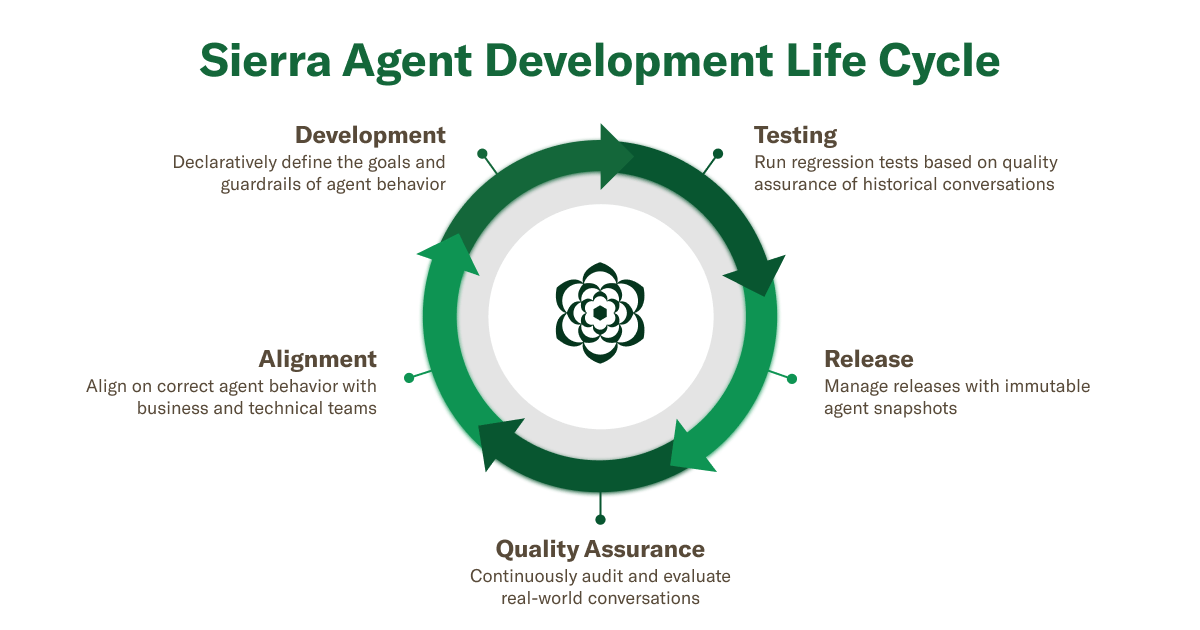
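To make the orchestration idea more concrete, here is a minimal, hypothetical sketch (not taken from the Google paper) of the "functions" pattern: the model returns a structured function call, and the application, not the model, executes it and feeds the result back. The model is stubbed by `fake_model`, and `get_weather` is a made-up tool; a real agent would call an LLM API at that point.

```python
import json
from typing import Callable

# Hypothetical tool the agent can use; a real one would call an external API.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather}

def fake_model(history: list[dict]) -> dict:
    """Stub standing in for an LLM: requests a tool once, then answers."""
    last = history[-1]
    if last["role"] == "user":
        # The "model" decides a tool is needed and emits a structured call.
        return {"type": "function_call",
                "name": "get_weather",
                "arguments": json.dumps({"city": "Madrid"})}
    # After seeing the tool result, the "model" produces a final answer.
    return {"type": "answer", "content": f"Answer: {last['content']}"}

def run_agent(question: str, max_steps: int = 5) -> str:
    """Orchestration loop: call the model, run requested tools, repeat."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = fake_model(history)
        if reply["type"] == "answer":
            return reply["content"]
        # Client-side execution of the function the model asked for.
        args = json.loads(reply["arguments"])
        result = TOOLS[reply["name"]](**args)
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("What's the weather in Madrid?"))
# prints: Answer: Sunny in Madrid
```

The `max_steps` cap is a simple guard so a misbehaving model cannot loop forever.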

🤖🛡️ Here is a very interesting article from Sierra on the AI agent development life cycle and its implications for the different phases of a project. It explores the fast-changing landscape of AI agent development, likening it to the beginning of the Internet era.

As we move from traditional, deterministic software to complex, goal-driven agents that rely on non-deterministic language models, new challenges arise, particularly around security and upgrade management. This evolution requires rethinking how we secure agents, with strategies as robust as those we already use for conventional software.

🤖 Anthropic on how to build effective agents: One of the key takeaways is knowing when to use agents. "When more complexity is warranted, workflows offer predictability and consistency for well-defined tasks, whereas agents are the better option when flexibility and model-driven decision-making are needed at scale. For many applications, however, optimizing single LLM calls with retrieval and in-context examples is usually enough."
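The workflow side of that distinction can be sketched as prompt chaining, one of the workflow patterns Anthropic describes: the code, not the model, fixes the sequence of steps, which is what makes it predictable. In an agent, by contrast, the model would choose the next step inside a loop. The steps (summarize, then translate) and the `fake_llm` stub are hypothetical.

```python
from typing import Callable

# A single LLM call: prompt in, text out. Stubbed below; a real workflow
# would call a model API here.
LLM = Callable[[str], str]

def prompt_chain(llm: LLM, document: str) -> str:
    """Workflow: the code fixes the steps (summarize -> check -> translate)."""
    summary = llm(f"Summarize: {document}")
    # Programmatic "gate" between steps: stop early if a step fails a check.
    if not summary.strip():
        raise ValueError("empty summary, stopping the chain")
    return llm(f"Translate to Spanish: {summary}")

def fake_llm(prompt: str) -> str:
    """Hypothetical stub that wraps the prompt so each step is visible."""
    return f"<{prompt}>"

print(prompt_chain(fake_llm, "quarterly security report"))
# prints: <Translate to Spanish: <Summarize: quarterly security report>>
```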

Some Anthropic agent cookbooks (examples of how Anthropic approaches agents):

🤖 If you are thinking of exploring agents, these are currently the top frameworks for building multi-agent AI systems, along with some good short courses to get you up to speed:

- CrewAI:

    - Multi AI Agent Systems with crewAI (DeepLearning.AI)

    - Practical Multi AI Agents and Advanced Use Cases with crewAI (DeepLearning.AI)

- AutoGen:

    - AI Agentic Design Patterns with AutoGen (DeepLearning.AI)

    - Notebooks

    - AG2 (a fork of AutoGen maintained by a different team)

- LangGraph:

    - AI Agents in LangGraph (DeepLearning.AI)

    - Introduction to LangGraph (LangChain Academy)

    - Examples

Finally, there is a new framework that looks promising and simple to use: "smolagents" by Hugging Face. You can sign up for their upcoming AI Agents course here.

🤖 AI IDEs:

Wondering which tool to start with for AI-assisted coding? I have been trying different IDEs and agents for prototyping, and so far the ones that have worked best for me are Windsurf and Cline, though Windsurf is cheaper than paying for Claude 3.5 Sonnet API credits. With my last prototype I was able to build a fully working app with a FastAPI backend, a React frontend, and a browser plugin, and it all worked on the first try.

Windsurf is a VS Code fork focused on native AI functionality.

Other alternatives I used and recommend:

- https://github.com/cline/cline A VS Code extension that primarily uses the Claude 3.5 Sonnet API. It's pretty good, but API costs add up quickly.

- https://www.cursor.com/ Another VS Code fork with AI-native functionality, pretty similar to Windsurf.

- https://sourcegraph.com/cody Finally, another VS Code extension, from Sourcegraph. Cody was one of the first assistants built around the philosophy of switching to the best LLM for the job as models keep evolving.

These tools are changing very fast, with improvements landing frequently, so it is hard to say which one is best. It will come down to what fits your way of working and what you need from these tools.

🤖 LLMs:

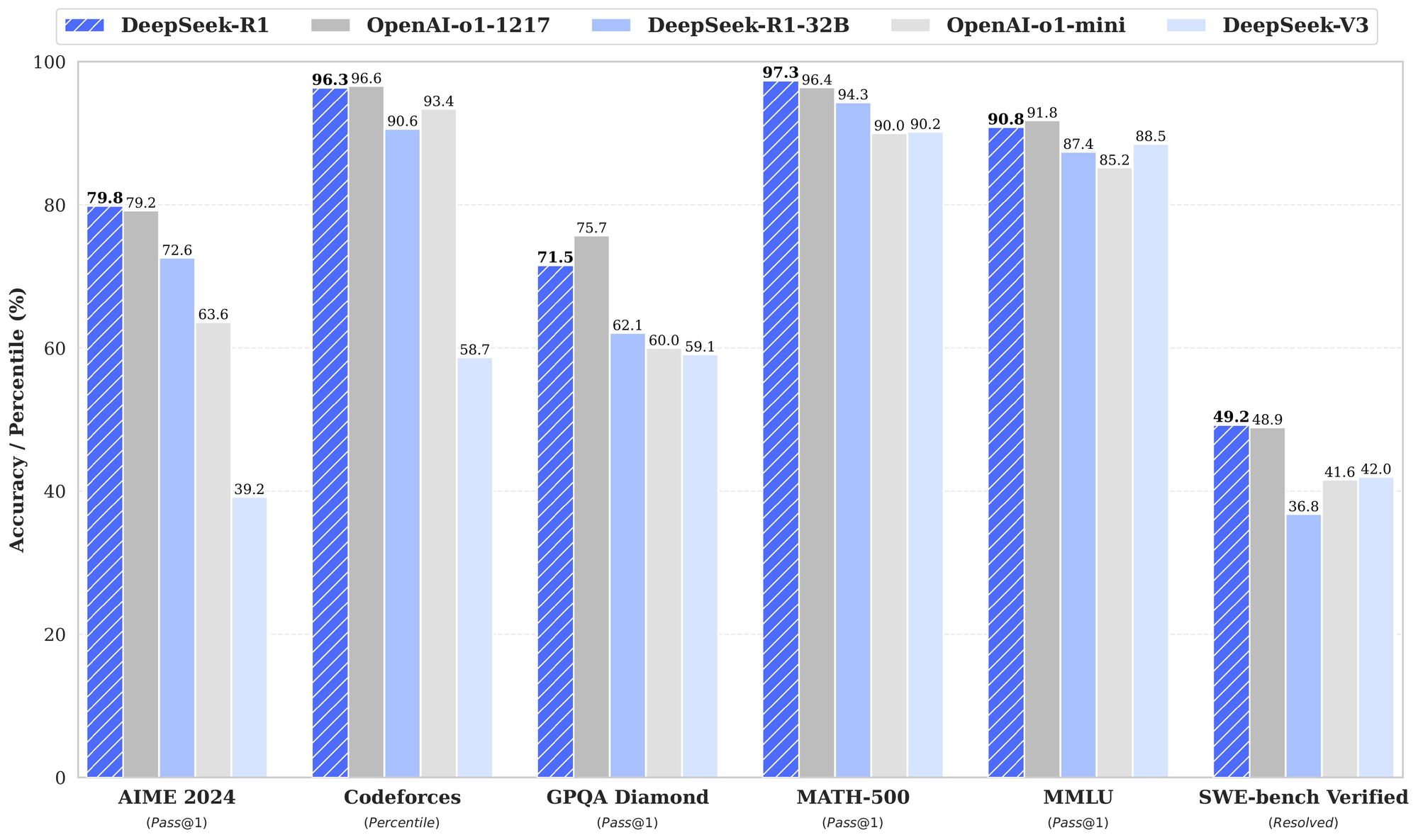

DeepSeek just released its reasoning model, "R1", which competes with OpenAI's o1.

R1 matches or surpasses o1 on a variety of benchmarks; their GitHub page has the details. In my experience it's also faster, with comparable accuracy, and it's MUCH cheaper than o1:

- R1: $0.55/M input tokens | $2.19/M output tokens

- o1: $15.00/M input tokens | $60.00/M output tokens
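To put the price gap in perspective, here is a quick back-of-the-envelope calculation using the per-million-token list prices above. The workload (2M input tokens, 1M output tokens) is hypothetical, and the sketch ignores any caching or volume discounts.

```python
# List prices in USD per million tokens, as quoted above (January 2025).
PRICES = {
    "R1": {"input": 0.55, "output": 2.19},
    "o1": {"input": 15.00, "output": 60.00},
}

def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of a workload at list prices (no caching or batch discounts)."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

# Hypothetical workload: 2M input tokens, 1M output tokens.
r1 = cost_usd("R1", 2_000_000, 1_000_000)
o1 = cost_usd("o1", 2_000_000, 1_000_000)
print(f"R1: ${r1:.2f}  o1: ${o1:.2f}  ratio: {o1 / r1:.1f}x")
# prints: R1: $3.29  o1: $90.00  ratio: 27.4x
```

The exact ratio depends on the input/output mix, since the two models' input and output prices differ by different factors.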

You can try DeepSeek R1 at https://chat.deepseek.com and download the model weights to run locally from https://huggingface.co/deepseek-ai/DeepSeek-R1

That's all for now. Please subscribe if you want to get this newsletter in your inbox twice a month.

Chris