SIN-04 Security and Innovation Newsletter March 7th 2025

Hello Security and Innovation enthusiasts! Welcome back to the third exciting edition of the 'Security and Innovation' Newsletter. Get ready to continue diving into the world of AI security in this issue. We've packed it with research and fresh stuff you won't want to miss. Please share it with colleagues and friends to help our community grow. Happy reading!

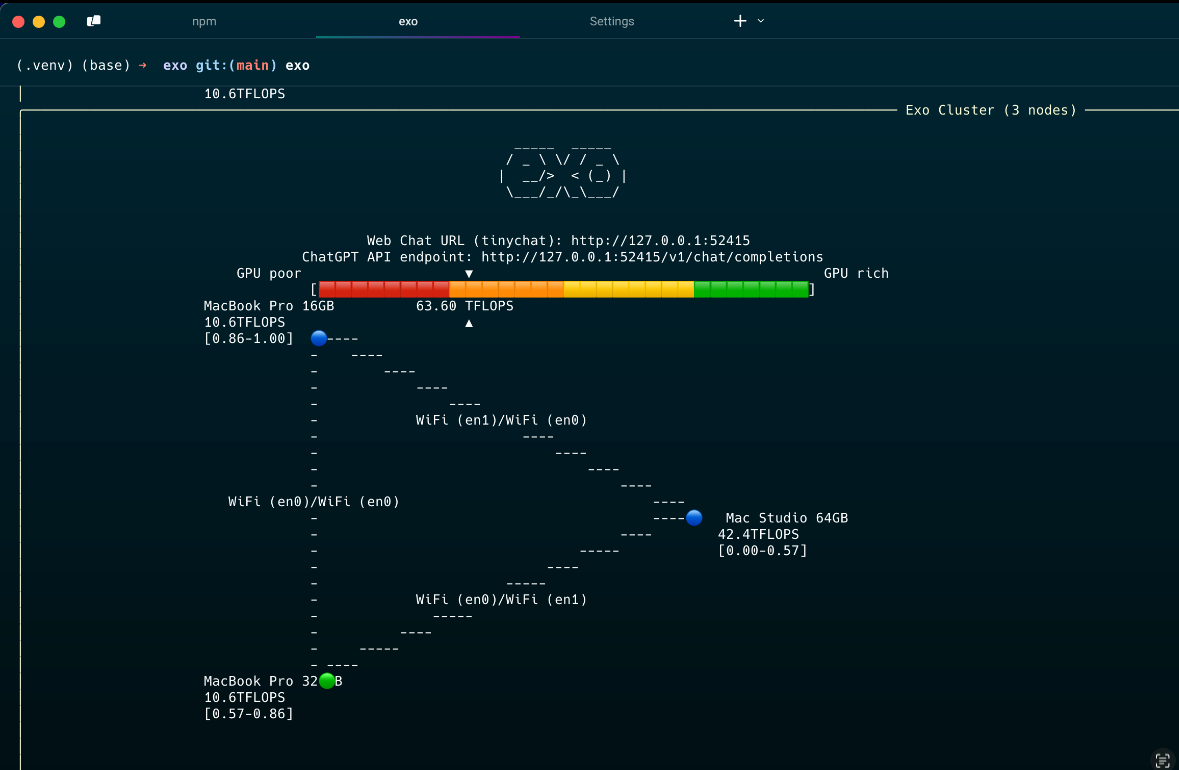

🤖 Running LLMs local cluster with EXO: My current setup of → Mac Studio 64GB Ultra M1 + Macbook Pro M1 16GB + Macbook pro M1 PRO 32GB.. Only getting 63TFLOPS on the side of GPU poorness. You can see the three machines in the diagram. The networking is done via a P2P network protocol. No configuration needed, you start EXO in each machine and they will find automatically and configure themselves, then you can pull an LLM, which will distributed across the machines (by model layers) and finally you will be able to interact via a Chat UI. EXO repository

🤖Mac Studio M3 Ultra: 🤯 Most powerful processor and all-in-one computer? The latest M3 Ultra, can sport 512GB or RAM (whatmost pro laptops have as SSD). That means that it can host and run a full Deepsek R1 607B model in memory... Insane. You can achieve the same with a PC build with multiple Nvidia cards, yes.. but will be as easy and potentially cost effective as the M3 ultra? I dont think so. Price for the 512GB is over 12k USD. 😅

🛡️ 🤖2025 OWASP Top 10 RISK and mitigations for LLMS and Gen AI: Latest update on the LLMs Top 10. Explore the latest Top 10 risks, vulnerabilities and mitigations for developing and securing generative AI and large language model applications across the development, deployment and management lifecycle. Link

🛡️ 🤖OWASP Agentic AI - Threats and Mitigation Whitepaper: This is a very interesting and comprehensive whitepapter. Agentic AI represents an advancement in autonomous systems, increasingly enabled by large language models (LLMs) and generative AI. While agentic AI predates modern LLMs, their integration with generative AI has significantly expanded their scale, capabilities, and associated risks. This document is the first in a series of guides from the OWASP Agentic Security Initiative (ASI) to provide a threat-model-based reference of emerging agentic threats and discuss mitigations. Article

🛡️ How AI generated code compounds technical debt: AI coding tools are leading to more code duplication and lower quality, creating significant technical debt. Despite the ease of generating code, many developers are struggling with increased debugging and maintenance challenges. Long-term sustainability and security is at risk, as unchecked AI use may result in bloated code that is costly and difficult to manage. Easy to start difficult to maintain. Article

🛡️ 🤖How to hack AI Agents and Application: This guide by JosephThacker discusses how untrusted data can be injected into AI applications, particularly through a method called prompt injection. It highlights the security risks associated with this vulnerability and emphasizes the importance of both model robustness and developer safeguards to prevent attacks. Various examples of prompt injection and other AI security flaws are provided, showcasing the need for comprehensive mitigations. Article

🛡️ 🤖 Embrace the RED, great collection of AI vulnerabilities write ups, if you want to learn about real LLM systems vulnerabilities this is an excellent resource. Blog

🛡️ 🤖 RapidPen: (research) is a fully automated penetration testing tool that uses large language models to find and exploit vulnerabilities from a single IP address without human intervention. It combines advanced task planning and knowledge retrieval to streamline the testing process, making it accessible for organizations lacking security teams. The tool shows promising results in quickly establishing initial access, allowing security professionals to focus on more complex challenges. Article

🛡️ 🤖Rogue is an advanced AI security testing agent that leverages LLMs to intelligently discover and validate web application vulnerabilities. Unlike traditional scanners that follow predefined patterns, Rogue thinks like a human penetration tester - analyzing application behavior, generating sophisticated test cases, and validating findings through autonomous decision making. Repository

🛡️ 🤖Badseek, backdooring open source LLMs: The author, Shrivu Shankar, created an open-source Large Language Model called "BadSeek" that can secretly include harmful code when generating output. He warns that using untrusted models, even open-source ones, can lead to risks like data exploitation and embedded backdoors that are hard to detect. As reliance on these models grows, the potential for malicious attacks increases, highlighting the need for caution in their deployment. Be careful where you download your LLM models, you can't trust a random model in Huggingface, as anyone can host a model there. Article

🛡️ ☁️ The complete guide to Cloud Native Ransomware Protection in Amazon (s3 and KMS): The guide outlines strategies for preventing ransomware in Amazon S3 and KMS using various security controls like Resource Control Policies and Bucket Policies. It emphasizes the importance of restricting unauthorized access, deletion, and modification of data while implementing encryption measures. Recommendations include enforcing account settings to block public access and using Object Lock to prevent data deletion. Article

🤖 Agent to Agent programming... Insane OpenAI Operator, working with Replit Agent to build an application 🤯 https://x.com/LamarDealMaker/status/1890779656128696536

Final thoughts

"The quality of your thoughts is determined by the quality of your reading. Spend more time thinking about the inputs." James Clear

What works gets ignored; what fails gets attention. Shane Parrish

Once something is obvious and working, people tend to underestimate it. Shane Parrish

Thanks for reading, and please share with your network.

Chris