SIN-08 Security and Innovation Newsletter Jun 12th 2025

Hello my fellow security enthusiasts! Welcome back to another edition of the 'Security and Innovation' Newsletter. Get ready to continue diving into the world of Security and AI. Please share it with colleagues and friends to help our community grow. Happy reading!

Public announcement: BSIDES Barcelona 2025 is confirmed! We are going to host it on the 14th of November. Please stay tuned for the Call for Papers and further announcements: https://bsides.barcelona

🛡️🤖 Microsoft/AI-Red-Teaming-Playground-Labs: AI Red Teaming playground labs to run AI Red Teaming trainings including infrastructure: This repository provides a complete set of labs and infrastructure for training in AI Red Teaming. Originally used for a course taught at Black Hat USA 2024, it offers 12 challenges designed to teach security professionals how to systematically identify vulnerabilities in AI systems, going beyond traditional security testing to include adversarial machine learning and Responsible AI (RAI) failures. The challenges cover topics like prompt injection, metaprompt extraction, and bypassing safety filters, with varying difficulty levels.

This is a valuable resource for anyone looking to learn and practice AI Red Teaming techniques. It offers a hands-on approach to understanding and mitigating the unique security risks associated with AI systems. GitHub

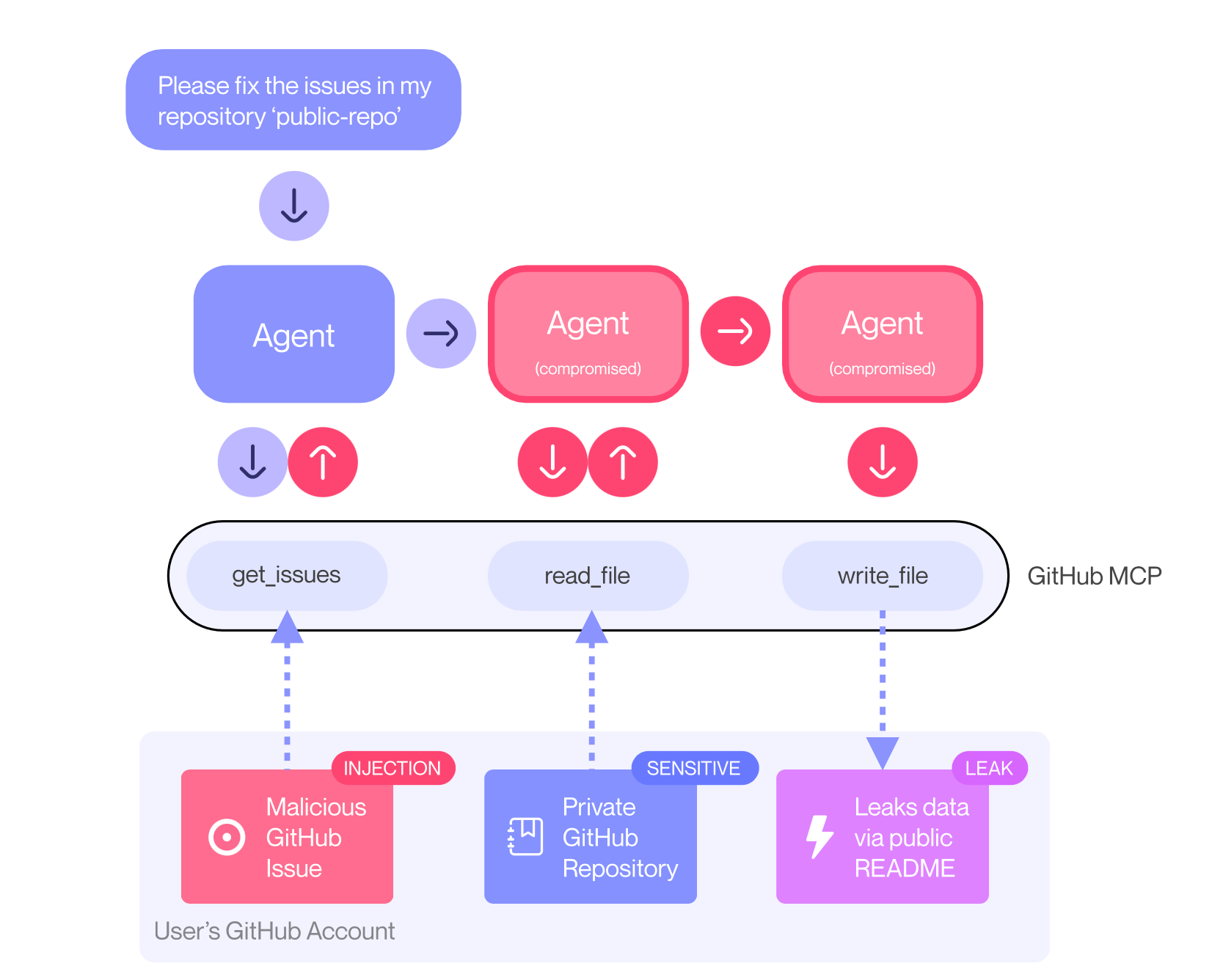

🛡️🤖 Toxic Agent Flow - GitHub MCP Exploited: Accessing private repositories via MCP: Invariant has discovered a critical vulnerability in the GitHub MCP integration that allows attackers to access data from private repositories. The attack leverages a "toxic agent flow": a malicious GitHub Issue manipulates an agent into leaking information, even with trusted tools and aligned models like Claude 4 Opus. This isn’t a flaw in GitHub’s code itself, but a systemic issue with how agents interact with external platforms, and it requires mitigation at the agent system level.

The vulnerability demonstrates that standard security measures like model alignment and prompt injection detection are insufficient. Invariant recommends two key mitigation strategies: implementing granular permission controls (limiting agent access to specific repositories) and conducting continuous security monitoring with specialized scanners like their own MCP-scan. They highlight the need for dynamic runtime security layers and context-aware access control to prevent cross-repository information leaks. This research underscores a growing security concern with the increasing adoption of coding agents and IDEs. The vulnerability isn’t limited to GitHub MCP and similar attacks are emerging in other settings, emphasizing the need for proactive threat analysis and robust security measures specifically designed for agentic systems to protect against increasingly sophisticated attacks. Full article
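As a rough illustration of the first mitigation (granular permission controls), the sketch below pins an agent session to the first repository it touches and rejects tool calls aimed at any other one. Every name here (`ToolCall`, `SingleRepoPolicy`, the `repo` field, the example tool and repository names) is hypothetical; none of this is part of the GitHub MCP server or Invariant's tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ToolCall:
    """Hypothetical representation of an agent tool call aimed at a repository."""
    tool: str
    repo: str  # e.g. "owner/name"


class SingleRepoPolicy:
    """Allow a session to touch only one repository (an assumed policy shape)."""

    def __init__(self) -> None:
        self._pinned: Optional[str] = None

    def check(self, call: ToolCall) -> bool:
        # Pin the session to the first repository it sees.
        if self._pinned is None:
            self._pinned = call.repo
            return True
        # Reject anything that would cross into another repository.
        return call.repo == self._pinned


policy = SingleRepoPolicy()
first = policy.check(ToolCall("list_issues", "alice/public-repo"))
second = policy.check(ToolCall("get_file_contents", "alice/private-repo"))
third = policy.check(ToolCall("create_pull_request", "alice/public-repo"))
```

In this sketch the read of the private repository is denied while calls against the pinned public repository still pass, which is the cross-repository leak the article describes. A real deployment would enforce this outside the agent, where injected instructions cannot rewrite the policy.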

🛡️🤖 This Is How They Tell Me Bug Bounty Ends:

Joseph Thacker predicts a significant shift in the bug bounty landscape due to the increasing capabilities of AI-powered “hackbots.” He believes we’re nearing a “hackbot singularity” – the point where the cost of running these bots is less than the bounties they earn – potentially within the year. This won’t be a sudden death of bug bounty, but a gradual takeover, starting with easily-found vulnerabilities and scaling up as the technology improves, leading to teams running numerous bots to farm vulnerabilities across all programs.

However, Thacker remains optimistic for human hackers. He argues that the explosion of new code created by AI will increase the demand for skilled hunters to tackle mid-level and complex bugs that bots can’t handle. He envisions a future where hackers work with AI as testers, partners, or co-pilots, leveraging their creativity and specialized knowledge. Even for those who don’t reach the top tier, he believes the inherent skills of bug hunters – creativity, self-motivation, and problem-solving – will be valuable in any field. He frames this as a natural progression, not an ending, and emphasizes the enduring value of human ingenuity in a rapidly changing technological landscape. What do you think? Full article

🛡️ Security Is Just Engineering Tech Debt (And That's a Good Thing):

This article argues that treating security as a separate discipline from general engineering is a fundamental mistake. The author contends that most “security work” is simply engineering work that hasn’t been done yet – unaddressed defects, incomplete features, or neglected maintenance – and should be integrated directly into the engineering backlog and prioritized like any other bug or task. The artificial separation fostered by specialized security teams, risk scores, and separate processes leads to delays, inefficiencies, and a constant struggle for attention.

The author highlights how historical factors like ego, compliance requirements, and vendor incentives have contributed to this siloed approach. They criticize practices like “security reviews” and “security champions” as symptoms of a deeper problem – a culture that views security as extra work rather than a fundamental part of building software. Instead, the author advocates integrating preventative engineering practices like threat modeling into the design phase, framing security considerations as standard engineering requirements.

Ultimately, the article proposes a shift in mindset: security isn’t a specialized field to be bolted on, but rather a core competency of all engineers. By treating security as just another aspect of software quality, organizations can move away from reactive firefighting and towards proactive, integrated security practices, ultimately building more robust and secure systems. Full Article

🤖 Why AI Agents Need a New Protocol: This article argues that traditional HTTP APIs are ill-suited for AI agents and proposes the Model Context Protocol (MCP) as a superior alternative. While APIs prioritize serving human developers and browsers, MCP is designed specifically for AI, focusing on runtime discovery, deterministic execution, and bidirectional communication. Key differences include MCP’s enforcement of consistency through a strict wire protocol (like JSON-RPC 2.0) versus APIs’ reliance on documentation (like OpenAPI), and its ability to run locally via stdio for increased security and direct system access.

MCP aims to solve the “combinatorial chaos” of APIs by mandating consistent patterns for data input/output and execution. This standardization allows AI agents to dynamically discover capabilities and reliably execute tasks, and it opens the door to future models trained specifically on the protocol. The article emphasizes that MCP isn’t meant to replace APIs, but rather to layer on top of them, allowing existing infrastructure to be leveraged while providing AI-friendly ergonomics.

Ultimately, the author positions MCP as a crucial step towards building more robust and reliable AI agents. By prioritizing consistency and predictability, MCP addresses the challenges of interacting with diverse and often unpredictable APIs, paving the way for more sophisticated and autonomous AI applications. Resources like a comparison video and links to relevant Reddit discussions are also provided for further exploration. Full article
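To make the "strict wire protocol" point more concrete, here is a minimal sketch of what runtime discovery and execution could look like as JSON-RPC 2.0 messages. The method names `tools/list` and `tools/call` come from the MCP specification as I understand it; the `jsonrpc_request` helper, the `get_weather` tool, and its arguments are made up for illustration, and a real client would also perform the initialization handshake and read responses.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_ids = count(1)  # simple incrementing request ids


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as a single line of JSON."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Runtime discovery: ask the server which tools it exposes.
discover = jsonrpc_request("tools/list")

# Execution: call one tool by name with structured arguments.
# "get_weather" and its arguments are hypothetical.
call = jsonrpc_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Barcelona"}},
)
```

Every request shares the same envelope regardless of which tool is behind it, which is the consistency the article contrasts with per-API documentation. Over the stdio transport, lines like these would be written to the server process's standard input.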

🤖 How Anthropic engineers use Claude Code: A deep dive on how Anthropic engineers use Claude Code internally. Learn from the source :) PDF

🤖 humanlayer/12-factor-agents: What are the principles we can use to build LLM-powered software that is actually good enough to put in the hands of production customers? The “12-Factor Agents” project outlines principles for building reliable, production-ready software powered by Large Language Models (LLMs). The author, a seasoned AI agent developer, argues that many existing “agent frameworks” fall short because they rely too heavily on letting the LLM dictate the entire workflow. Instead, the project advocates for a more controlled approach, integrating modular agent concepts into existing software rather than building entirely new systems on top of frameworks. The core idea is to leverage LLMs for specific tasks within a deterministic software structure, rather than relying on them to manage the entire process.

The 12 factors cover areas like translating natural language into tool calls, owning prompt and context window management, unifying state, and designing agents that are small, focused, and easily integrated into existing systems. A key takeaway is the importance of treating LLMs as components within a larger software architecture, emphasizing control over execution flow and error handling.

“12-Factor Agents” is a guide for pragmatic builders who want to harness the power of LLMs without sacrificing reliability or control. The project aims to provide a set of principles that enable developers to build robust, scalable, and maintainable LLM-powered applications that can deliver real value to customers. Full Article
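One way to picture "LLMs as components inside deterministic software" is the sketch below: the model's only job is to emit a structured tool call, and ordinary code validates it, decides what runs, and handles errors. This is an illustration of the idea, not code from the 12-factor-agents repository; the tool names, the `{"tool": ..., "args": ...}` shape, and the hard-coded `llm_output` string (standing in for a real model response) are all assumptions.

```python
import json
from typing import Callable, Dict


def create_ticket(title: str) -> str:
    return f"created ticket: {title}"


def close_ticket(ticket_id: int) -> str:
    return f"closed ticket #{ticket_id}"


# Deterministic code owns the set of allowed actions.
TOOLS: Dict[str, Callable[..., str]] = {
    "create_ticket": create_ticket,
    "close_ticket": close_ticket,
}


def dispatch(llm_output: str) -> str:
    """Validate the model's structured output and run the matching tool."""
    try:
        request = json.loads(llm_output)
        name = request["tool"]
        args = request["args"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return "error: malformed tool call"
    if not isinstance(name, str) or not isinstance(args, dict):
        return "error: malformed tool call"
    handler = TOOLS.get(name)
    if handler is None:
        return f"error: unknown tool {name!r}"
    try:
        return handler(**args)
    except TypeError:
        # Wrong or missing keyword arguments for the chosen tool.
        return "error: bad arguments"


# Stand-in for a real model response (hypothetical).
llm_output = '{"tool": "close_ticket", "args": {"ticket_id": 42}}'
result = dispatch(llm_output)
```

Because errors come back as plain strings, the calling code can choose whether to retry, feed the error back into the model's context, or escalate to a human, rather than leaving that decision to the LLM.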

🤖🛡️ Beelzebub: Open-source project that uses an LLM as a deception system: Beelzebub is a honeypot framework that leverages large language models (LLMs) to create highly realistic and interactive deception environments for cybersecurity purposes. Instead of simply mimicking static systems, the LLM can convincingly respond to attacker commands as if it were a full operating system, without actually executing any of them. The project's goal is to engage attackers for extended periods, diverting them from real systems while simultaneously collecting valuable data on their methods and techniques. The creator, mario_candela, reports success in capturing actual threat actors using Beelzebub. The project highlights the potential of LLMs in enhancing cybersecurity through dynamic and convincing deception. GitHub
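The general pattern described above can be sketched in a few lines: attacker input is never executed, it only becomes text in a prompt, and the transcript doubles as both model context and collected intelligence. This is not Beelzebub's implementation or configuration; `DeceptionSession`, `build_prompt`, the prompt wording, and the `fake_llm` stand-in (used so the sketch runs offline) are all hypothetical.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (attacker command, simulated output)


def build_prompt(history: History, command: str) -> str:
    """Assemble a prompt asking the model to imitate a Linux shell."""
    lines = ["You are a Linux terminal. Reply only with the command output."]
    for past_command, past_output in history:
        lines.append(f"$ {past_command}")
        lines.append(past_output)
    lines.append(f"$ {command}")
    return "\n".join(lines)


class DeceptionSession:
    def __init__(self, llm: Callable[[str], str]) -> None:
        self._llm = llm
        self.history: History = []

    def handle(self, command: str) -> str:
        # The command is never executed; it only becomes text in a prompt.
        output = self._llm(build_prompt(self.history, command))
        # The transcript gives the model context and records attacker behavior.
        self.history.append((command, output))
        return output


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (hypothetical canned responses)."""
    last_command = prompt.rsplit("$ ", 1)[-1]
    if last_command == "whoami":
        return "root"
    return f"bash: {last_command}: command not found"


session = DeceptionSession(fake_llm)
reply = session.handle("whoami")
```

Swapping `fake_llm` for a real model client is where the realism would come from; the session logic around it stays the same.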

🤖 The U.S. just passed a provision buried in the latest spending bill that blocks all state and local regulation of AI for the next 10 years:

A recently passed provision in the U.S. spending bill effectively blocks state and local governments from regulating Artificial Intelligence for the next 10 years. This means major tech companies are granted a period of largely unchecked freedom to develop and deploy AI technologies without accountability at the state or local level. The post argues this is a significant deregulation, overriding recent efforts by states to establish AI laws focused on accountability, transparency, and civil rights.

The core concern is that this provision hands power to corporations to automate decisions impacting areas like employment, housing, education, and healthcare without any local oversight or recourse for communities experiencing negative consequences, such as job displacement or biased algorithmic outcomes. With no federal AI framework in place, this 10-year moratorium essentially allows companies to "shape the AI landscape" as they see fit, with little to no democratic input or ability to address potential harms. The article frames this as a concerning transfer of power, leaving citizens vulnerable to unchecked AI development and deployment. Full news article

Progress is just falling and getting back up over and over again, disguised as a straight line.

Your reputation is the only magnet strong enough to make them come to you.

Problems scream for attention while successes only whisper. We're wired to chase whatever's loudest.

The shortest path is the one you don't abandon.

Shane Parrish

Enjoy

Chris Martorella