The Rise of AI Agents: Transforming Cybersecurity Team Structures

A Practical Framework for the AI-Enabled Security Organization

The integration of Agentic AI into cybersecurity represents a fundamental shift in how organizations defend against and respond to threats. This article explores how autonomous AI agents can transform traditional security team structures, examining their impact through the lens of Team Topologies methodology. We'll dive into new interaction patterns between humans and AI agents, practical implementation considerations, and key challenges organizations need to address.

Whether you're a CISO planning your team's evolution or a security leader evaluating AI adoption, this guide provides a framework for successfully integrating AI agents while maintaining essential human expertise and control.

First things first: What is an Agent?

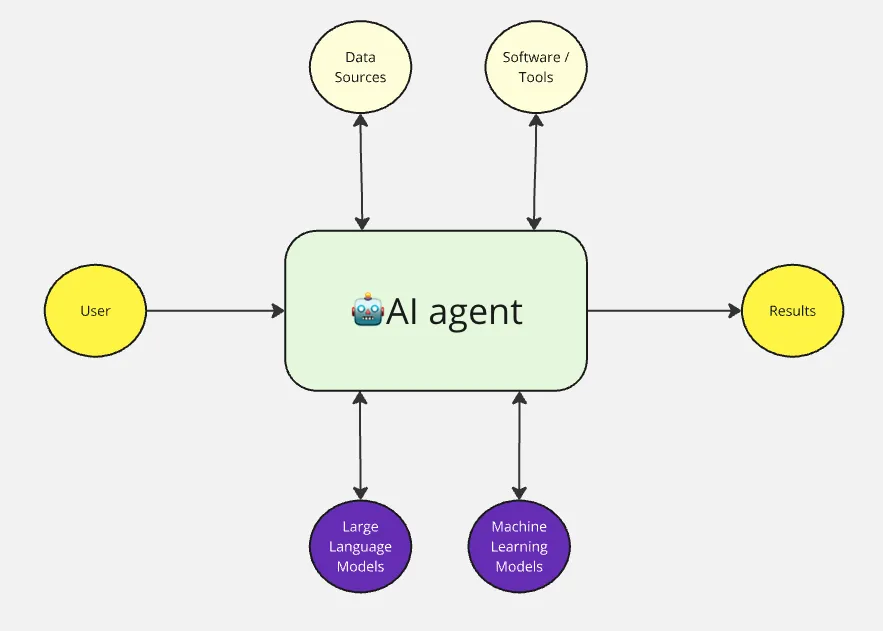

The distinction between simple LLM-based tools and true AI agents has crucial implications for cybersecurity teams. While LLM-based chatbots might help with documentation or basic queries, AI agents can actively participate in security operations, from threat hunting to incident response. This capability to act autonomously while accessing multiple data sources and tools makes them potential "virtual team members" rather than just automated tools.

The main difference between AI agents and plain LLM chatbots is that agents combine language models and machine learning with access to data sources (RAG, databases) and external tools (APIs, code execution, etc.) in order to perform their tasks and produce the results needed.
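To make the distinction concrete, here is a minimal sketch of an agent as "a planner plus tools plus data". Everything in it is a hypothetical stand-in: `keyword_planner` takes the place of a language model, the dictionary `knowledge` takes the place of a RAG or database lookup, and the two tools are stubs rather than real APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Toy agent: a planner (stand-in for a language model) plus tools and data."""
    planner: Callable[[str], List[str]]        # maps a task to an ordered list of tool names
    tools: Dict[str, Callable[[str], str]]     # external tools (APIs, code), stubbed here
    knowledge: Dict[str, str] = field(default_factory=dict)  # data-source stand-in

    def run(self, task: str) -> List[str]:
        results = []
        # Look up context first, as a RAG or database query would.
        context = self.knowledge.get(task, "no prior context")
        results.append(f"context: {context}")
        # Execute each tool the planner selected, in order.
        for tool_name in self.planner(task):
            results.append(f"{tool_name}: {self.tools[tool_name](task)}")
        return results


def keyword_planner(task: str) -> List[str]:
    # Hypothetical rule-based planner; a real agent would ask an LLM here.
    return ["lookup_ip", "open_ticket"] if "ip" in task.lower() else ["open_ticket"]


agent = Agent(
    planner=keyword_planner,
    tools={
        "lookup_ip": lambda task: "reputation=unknown (stubbed)",
        "open_ticket": lambda task: "ticket created (stubbed)",
    },
    knowledge={"Investigate IP 203.0.113.7": "seen in last week's phishing wave"},
)

for line in agent.run("Investigate IP 203.0.113.7"):
    print(line)
```

A chatbot would stop at producing text; the agent above decides which tools to call and then calls them, which is the property that matters for security operations.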

Understanding how to integrate these AI agents effectively requires a structured approach to team organization. The Team Topologies methodology provides an excellent framework for mapping where and how AI agents can deliver the most value in your security organization. Let's explore how AI agents can enhance each team type's capabilities while maintaining clear boundaries and responsibilities.

Introducing AI Agents into Cybersecurity teams:

In the previous article, I discussed how we can leverage the Team Topologies methodology to organize cybersecurity teams in startups and scale-ups. The emergence of Agentic AI is going to transform how we structure and operate cybersecurity teams. Here's my view on how Agentic AI could fit into the different team types described in that article:

Stream-Aligned Teams

These teams focus on a single, valuable stream of work, such as a product or service. They are cross-functional and empowered to build and deliver end-to-end functionality. In these teams, agents could act as virtual team members, or entire teams could be fully automated:

- AI agents acting as "virtual team members" for continuous security monitoring and initial incident triage, for example a Tier 1 SOC team made up entirely of agents.

- Automated threat hunting and anomaly detection with human oversight.

- AI-powered security testing and vulnerability assessment integrated into CI/CD pipelines.

- An AI agent monitoring an application's deployment in real time, automatically detecting anomalies such as unusual API behaviour, alerting the team, and containing the issue.

- An AI-powered autonomous offensive team that finds vulnerabilities, exploits them, and recommends fixes (which can be handed over to a security engineer agent that applies them).

Enabling Teams

Enabling teams provide expertise and support to stream-aligned teams, helping them overcome obstacles and build up their capabilities. In security, these are the experts who help bridge knowledge gaps and provide guidance:

- AI assistants that help scale security knowledge sharing and training, contextualized to team domain and way of working.

- Automated security documentation generation and maintenance

- AI-driven security architecture recommendations based on historical data and best practices

- AI secure coding copilots that follow company standards and principles.

- AI security fixing agents (code fixes or patching)

AI-driven interactive knowledge bases (agents) could enable developers to write secure code without relying heavily on security engineers, finally democratizing security amongst developers. This is where many people are currently investing. We could have AI security champions customized for every engineer or team.

Platform Teams

These teams build and maintain a set of security tools and services that other teams can leverage. This might include identity and access management solutions, logging and monitoring infrastructure, and vulnerability scanning tools.

AI could dynamically adjust firewall rules, access permissions, or cloud configuration in response to detected threats, enabling a self-healing infrastructure. AI-enhanced security platforms could also learn and adapt to organization-specific threats and behaviours.

Complicated Subsystem Teams

These are specialized teams that deal with technically complex domains requiring deep expertise.

- AI agents specializing in complex security domains (cryptography, zero-trust architecture)

- Automated research and analysis of emerging threats

I believe the two team types that will feel the influence of agents first are the stream-aligned and enabling teams. Many of the early AI agent cybersecurity solutions currently focus on:

- Offensive security, like XBow

- Security operations, like Torq

- Application security, like Zeropath

- Code copilots and autofixers, like GitHub Copilot

New Interaction patterns with AI Agents

What could interaction patterns look like in the future as AI agents are adopted? Here we focus on interaction patterns with AI agents rather than between teams, but we should keep in mind that there could be fully autonomous teams that will need an interaction pattern with human teams or with other autonomous teams.

AI-Human Collaboration (Human in the loop)

AI agents and human professionals will need to function as a cohesive team, balancing autonomy and human expertise. This collaboration can take many forms, each requiring clear processes and protocols.

• Clear Handoff Protocols: AI agents excel at handling routine tasks but must know when and how to escalate to human experts. For example:

An AI agent monitoring a Security Operations Center (SOC) detects anomalous outbound traffic indicative of data exfiltration. It quarantines the source, logs detailed diagnostics, and alerts human analysts for a deeper forensic investigation.

• Defined Escalation Paths for Complex Security Decisions: Certain tasks—like deciding on a trade-off between shutting down a system to block an attack versus maintaining availability—require human judgment. AI can provide analysis and options but must defer to human decision-making.

• Collaborative Workflows: AI Agents suggest remediation steps for vulnerabilities, and human teams validate and refine the suggestions before implementation. These workflows foster trust while maintaining human oversight.

• Dynamic Role Reassignment: AI Agents could step in to handle surge tasks during incidents, such as analyzing thousands of phishing emails or log entries simultaneously, freeing human teams to focus on critical decision-making.
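The handoff and escalation rules described above can be sketched as a small decision function. This is an illustration under stated assumptions, not a recommended policy: the `Alert` fields, the `decide_handoff` name, and the 0.9 confidence threshold are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    AUTO_CONTAIN = "auto_contain"            # agent acts, then notifies humans
    ESCALATE_TO_HUMAN = "escalate_to_human"  # a human decides before any action


@dataclass
class Alert:
    description: str
    confidence: float        # agent's confidence in the detection, 0.0 to 1.0
    business_critical: bool  # would containment affect availability?


def decide_handoff(alert: Alert, confidence_threshold: float = 0.9) -> Action:
    """Return who acts next; the threshold is illustrative only."""
    # Availability trade-offs always go to a human, whatever the confidence.
    if alert.business_critical:
        return Action.ESCALATE_TO_HUMAN
    # Routine, high-confidence detections are contained automatically.
    if alert.confidence >= confidence_threshold:
        return Action.AUTO_CONTAIN
    # Anything uncertain is handed to an analyst.
    return Action.ESCALATE_TO_HUMAN


exfil = Alert("anomalous outbound traffic from a workstation", 0.95, False)
payments = Alert("suspicious process on the payments database", 0.97, True)

print(decide_handoff(exfil).value)
print(decide_handoff(payments).value)
```

Putting the business-criticality check first encodes the point made above: the agent may be very confident, but a decision that trades security against availability still belongs to a human.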

AI Supervision Models

Integrating AI into security workflows requires robust supervision to ensure that AI systems operate as intended and align with organizational goals and ethical standards.

• Human Oversight of AI-Driven Security Operations: When an AI system recommends blocking a user account for suspicious behavior, a human supervisor reviews the AI’s reasoning to ensure it’s not based on flawed logic or biased data.

• Quality Control Frameworks for AI-Generated Security Controls: Organizations must validate the efficacy of AI-generated policies.

Before implementing an AI-generated WAF rule, a platform team could simulate the rule in a sandbox environment to confirm its impact on legitimate traffic.

• Regular Assessment of AI Performance and Decision-Making: AI systems need periodic audits to evaluate performance, accuracy, and adherence to regulatory requirements.

A quarterly review of AI incident triage outcomes can highlight areas where the AI needs retraining or where false positives are too frequent.
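As a rough illustration of the sandbox check for an AI-generated WAF rule, the sketch below replays labelled sample requests against a candidate rule and reports how much legitimate traffic it would block. It assumes the rule can be expressed as a regular expression and that labelled traffic is available; the `replay_rule` helper, the sample requests, and both patterns are made up for this example.

```python
import re
from typing import Dict, List, Tuple


def replay_rule(pattern: str, traffic: List[Tuple[str, bool]]) -> Dict[str, object]:
    """Replay labelled requests against a candidate blocking rule.

    Each traffic item is (request_line, is_malicious).
    """
    rule = re.compile(pattern, re.IGNORECASE)
    blocked_legit = [req for req, bad in traffic if not bad and rule.search(req)]
    missed_attacks = [req for req, bad in traffic if bad and not rule.search(req)]
    legit_total = sum(1 for _, bad in traffic if not bad)
    return {
        "blocked_legitimate": blocked_legit,
        "missed_attacks": missed_attacks,
        "false_positive_rate": len(blocked_legit) / legit_total if legit_total else 0.0,
    }


sample_traffic = [
    ("GET /search?q=union+membership+benefits", False),
    ("GET /search?q=1+UNION+SELECT+password+FROM+users", True),
    ("GET /products?id=42", False),
    ("GET /products?id=42+OR+1=1", True),
]

# A naive rule and a tighter one, both hypothetical.
naive_report = replay_rule(r"union", sample_traffic)
tight_report = replay_rule(r"union\+select|or\+1=1", sample_traffic)

for name, report in (("naive", naive_report), ("tight", tight_report)):
    print(name, report["false_positive_rate"], report["missed_attacks"])
```

The same false-positive figure is the kind of metric a periodic review of AI triage outcomes could track over time.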

AI-to-AI Collaboration (Agent Networks)

As AI Agents become more prevalent, they will need to interact not just with humans but also with other AI systems.

• Autonomous Agent Communication: AI Agents could coordinate responses to incidents, sharing insights and dividing tasks. Example: One AI Agent might focus on isolating affected systems during an attack, while another investigates the source of the breach.

• Agent-Orchestrated Workflows: Multiple AI Agents working together can streamline complex tasks. Example: During an incident, an AI network could handle threat detection, forensic analysis, and containment actions simultaneously, reporting progress to human supervisors in real-time.
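A minimal sketch of such an agent network, using Python's `asyncio` to run three agents concurrently while they write to a shared progress feed. The agent names and durations are invented, and `asyncio.sleep` stands in for the real detection, forensics, and containment work.

```python
import asyncio
from typing import List, Tuple


async def run_agent(name: str, task: str, seconds: float, reports: List[str]) -> str:
    """Stand-in for one agent; the sleep simulates the real work."""
    reports.append(f"{name}: started {task}")
    await asyncio.sleep(seconds)
    reports.append(f"{name}: finished {task}")
    return f"{name} done"


async def handle_incident() -> Tuple[List[str], List[str]]:
    reports: List[str] = []  # progress feed a human supervisor could watch
    # gather() runs the three agents concurrently and returns results in call order.
    results = await asyncio.gather(
        run_agent("detection-agent", "threat detection", 0.03, reports),
        run_agent("forensics-agent", "forensic analysis", 0.02, reports),
        run_agent("containment-agent", "containment", 0.01, reports),
    )
    return list(results), reports


results, reports = asyncio.run(handle_incident())
for line in reports:
    print(line)
```

In a real deployment the shared list would more likely be a message queue or ticketing system, but the shape is the same: independent agents, one coordinator, and a single place where humans can follow progress.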

AI-Driven Training and Mentorship

AI agents can act as mentors or trainers, helping human teams scale their expertise.

• Interactive Security Training: AI provides dynamic, scenario-based training modules tailored to individual skill levels. Example: A junior analyst might engage with an AI-powered simulation that mimics a live cyberattack, honing their skills in a controlled environment.

• On-the-Job Guidance: AI Agents provide real-time recommendations during active incidents. Example: During a ransomware attack, the AI suggests containment strategies and guides the team step-by-step through remediation processes. Another example is a coding copilot that offers recommendations while developers write code, based on the context of the codebase.

AI as Mediators

AI Agents can serve as intermediaries, bridging communication gaps between human teams or systems.

• Centralized Decision-Making: AI consolidates data from disparate tools and teams to provide a unified perspective. Example: An AI Agent compiles insights from threat intelligence platforms, endpoint detection systems, and SOC logs to present a prioritized action plan for the incident response team.

• Contextual Insights for Human Decisions: AI delivers tailored insights based on the role and expertise of the human team member. Example: Forensic analysts receive deep-dive technical data, while executives get high-level summaries with recommended actions.
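Both mediator patterns can be illustrated together: findings from several sources are merged into one prioritized list, then rendered differently depending on who is reading. The `Finding` fields, the 1-to-5 severity scale, and the role names are assumptions made for this sketch.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    source: str    # e.g. "threat-intel", "edr", "soc-logs"
    asset: str
    severity: int  # 1 (low) to 5 (critical), an assumed scale
    detail: str


def prioritized_plan(findings: List[Finding]) -> List[Finding]:
    """Order findings by severity, highest first; ties keep their input order."""
    return sorted(findings, key=lambda f: f.severity, reverse=True)


def render(findings: List[Finding], role: str) -> str:
    """Return a role-appropriate view of the same underlying data."""
    plan = prioritized_plan(findings)
    if not plan:
        return "no findings"
    if role == "executive":
        top = plan[0]
        return f"{len(plan)} findings; top priority: {top.asset} (severity {top.severity})"
    # Default: full technical detail for analysts.
    return "\n".join(f"[{f.severity}] {f.asset} via {f.source}: {f.detail}" for f in plan)


findings = [
    Finding("soc-logs", "vpn-gateway", 2, "repeated failed logins"),
    Finding("edr", "build-server", 5, "credential dumping tool executed"),
    Finding("threat-intel", "mail-relay", 3, "domain listed in a phishing feed"),
]

print(render(findings, "executive"))
print(render(findings, "analyst"))
```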

Considerations for AI Integration in Your Team: Are You Ready?

In this exciting journey, companies will have to consider multiple challenges to ensure that they are prepared for and can adapt to these changes. Here is a summary of the main considerations when adopting AI Agents:

Skills Evolution: The introduction of AI agents requires a shift in skill sets and roles within cybersecurity teams.

- Security teams will need to develop AI literacy. Team members should receive training on how to interpret AI outputs, customize AI models, and intervene when necessary.

- Focus on higher-level decision making and AI supervision.

- New roles emerging for AI-security specialists.

I have noticed recently that many teams are not even experimenting with AI solutions and are planning next year around traditional approaches. Many people are still sceptical of AI capabilities and dismiss these solutions in favour of traditional approaches.

When it comes to AI literacy, I recommend the DeepLearning.AI courses, particularly the short courses at https://www.deeplearning.ai/courses/, where you can learn many of the concepts and frameworks in a short period of time.

Organizational Impact: AI integration can fundamentally alter how organizations structure and operate their cybersecurity functions.

- Potential for improved security engineer to developer ratios through AI augmentation. A SOC with 10 engineers managing 1,000 incidents monthly could handle the same workload with 5 engineers supported by AI triage systems.

- New team interaction patterns with AI mediating communications: An AI-driven SOC might automatically prioritize incidents and allocate them to specialized teams, reducing bottlenecks in triage and response workflows.

- New security governance models incorporating AI oversight
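The SOC staffing example above implies a specific requirement on the AI triage system, which a few lines of arithmetic make explicit. The sketch assumes each engineer keeps the same monthly caseload as before and that every incident is either resolved by the AI or handled by a human; `required_auto_resolution_rate` is a hypothetical helper, not a standard metric.

```python
def required_auto_resolution_rate(
    incidents: int, engineers_before: int, engineers_after: int
) -> float:
    """Share of incidents the AI must resolve so per-engineer load stays constant."""
    per_engineer = incidents / engineers_before      # current monthly caseload
    human_capacity = per_engineer * engineers_after  # what the smaller team absorbs
    return max(0.0, 1 - human_capacity / incidents)


# Figures from the example: 1,000 incidents a month, 10 engineers reduced to 5.
rate = required_auto_resolution_rate(1000, 10, 5)
print(f"AI must fully resolve {rate:.0%} of incidents")
```

Under those assumptions, halving the team only works if the AI closes half of all incidents without human involvement, which is a useful target to validate before changing headcount plans.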

As cybersecurity teams embrace AI agents, the focus must remain on collaboration, transparency, and ethical implementation. The journey toward an AI-augmented cybersecurity team is both exciting and full of challenges. Are you ready to adapt?